I’ve had a few people ask me about this, so I figured I would write an article on what I used to do it.

Parts List:

Zigbee/Z-Wave Controller: I use a SmartThings hub (now Aeotec), but if you already have a hub, anything compatible with Zigbee/Z-Wave will work.

RGBW Controller: I used a Monoprice 136511, but it looks like they may no longer be made or are sold out. Any Z-Wave or Zigbee RGBW controller will work; just make sure it is rated for enough amperage to power your LED strips. Depending on length, this can be up to 20 amps.

LED Power Supply: This depends on your LED length and voltage. I used 12VDC 16′ light sections from Amazon for the big ceiling, which has three of those strips connected together. That needs quite a bit of amperage at 12VDC, so I chose a hard-wired PSU and added a cooling fan to the top of it.

For our shorter runs, we used standard power-brick-style PSUs. You could use this style for both, though, since it has enough power capacity.

LED Strips: I chose generic, fairly cheap RGBWW (warm white) LED strips with an adhesive backing.
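If you want a rough idea of how much amperage your controller and power supply need, the arithmetic is just watts divided by volts. Here is a minimal sketch in Python. The 4.0 watts-per-foot figure and the 20% headroom are assumptions for illustration only, not numbers from my strips, so swap in the rating printed on your own strip’s listing or datasheet. The function name `required_amps` is made up for this example.

```python
def required_amps(total_feet, watts_per_foot, volts=12.0, headroom=0.2):
    """Return (load_amps, recommended_amps) for one run of LED strip."""
    load_watts = total_feet * watts_per_foot      # power at full brightness
    load_amps = load_watts / volts                # current = power / voltage
    recommended_amps = load_amps * (1 + headroom) # leave some margin
    return load_amps, recommended_amps


# Three 16 ft strips connected together, like the big ceiling run.
total_feet = 3 * 16

# ASSUMPTION: 4.0 W per foot at full brightness; use your strip's real rating.
load, recommended = required_amps(total_feet, watts_per_foot=4.0)

print(f"Load: {load:.1f} A, size the PSU and controller for about {recommended:.1f} A")
```

Whatever number you get, both the power supply and the RGBW controller need to be rated at or above it.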

Installation:

Installation is fairly simple if you are handy and have basic electrical skills.

The wiring runs from the power supply to the RGBW controller, then from the controller to the LED light strip(s).

Generally, once the controller is powered, your hub will see it and you’ll be able to adopt it, and it basically just works from there. I’m not going to go into detail on this step because the variations depending on your devices are limitless, but as long as they speak the same protocol (Z-Wave or Zigbee), you should have no issues.

We used multiple controllers, one for each set of LEDs, so that they could be controlled individually. With things like SmartThings and Alexa you can group them, so they can change together or be told to change independently. The flexibility is yours.
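To picture how one controller per set of LEDs plus grouping works, here is a small sketch in plain Python. It is only a model of the idea and does not use the SmartThings or Alexa APIs. The `Controller` class, the `set_group` function, and the names `fan`, `tv`, `dining`, and `living_area` are all made up for illustration.

```python
class Controller:
    """Stand-in for one RGBW controller driving one set of LED strips."""

    def __init__(self, name):
        self.name = name
        self.color = (0, 0, 0, 0)  # red, green, blue, white, each 0-255

    def set_color(self, rgbw):
        self.color = rgbw


# One controller per set of LEDs (hypothetical names).
controllers = {name: Controller(name) for name in ("fan", "tv", "dining")}

# A group is just a list of controller names that should change together.
groups = {"living_area": ["fan", "tv", "dining"]}


def set_group(group_name, rgbw):
    """Apply the same color to every controller in the group."""
    for name in groups[group_name]:
        controllers[name].set_color(rgbw)


# Change everything together...
set_group("living_area", (0, 0, 0, 255))

# ...then override individual controllers independently.
controllers["fan"].set_color((0, 255, 0, 0))
controllers["tv"].set_color((255, 0, 0, 0))
```

In practice the hub or voice assistant app handles the grouping for you; the point is that each controller stays individually addressable even when it belongs to a group.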

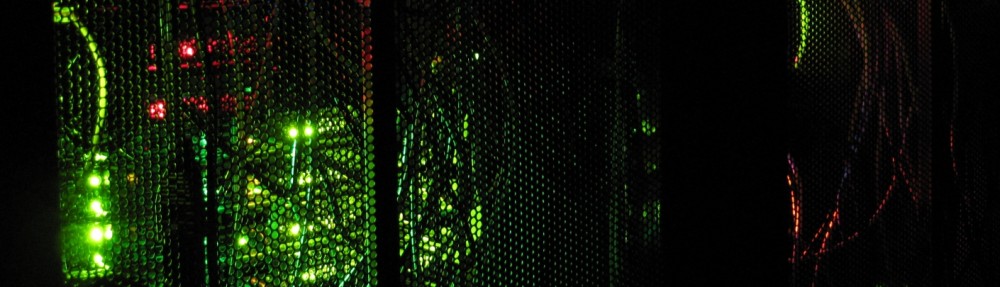

We have fantastic ceilings with a great place to hide the lights behind some crown moulding, and we couldn’t be happier with the results. The lights are now two years into service, used every day, and they all still work perfectly:

The green around the fan is one controller driving one long set of joined RGBWW LEDs.

The red around and above the TV is another set of two LED strips, connected with a longer extension but controlled by the same controller.

The white gap on the left above the fireplace is our dining room, which is on another controller. We have also started adding them underneath our kitchen cabinets, although that project has stalled a bit lol.