So this is an issue with this house that has been around since we bought it, and frankly I should have fixed it a long time ago, but here we are… It’s one of those low-priority items on the list, since it only causes a problem every year or so and fixing it each time takes significantly less effort than a permanent fix. At any rate, I made a video so that people understand that if you add a garbage disposal, you should pay attention to your drain elevations so you either know to expect this issue or, far preferably, avoid it.

Category Archives: Opensource

OpenNMS / PagerDuty

With the release of OpenNMS 30, we found that the PagerDuty plugin was broken.

Issue #9 was opened on Jul 20 to report the error:

Error executing command: Unable to resolve root: missing requirement [root] osgi.identity; osgi.identity=opennms-plugins-pagerduty; type=karaf.feature; version="[0.1.3,0.1.3]"; filter:="(&(osgi.identity=opennms-plugins-pagerduty)(type=karaf.feature)(version>=0.1.3)(version<=0.1.3))" [caused by: Unable to resolve opennms-plugins-pagerduty/0.1.3: missing requirement [opennms-plugins-pagerduty/0.1.3] osgi.identity; osgi.identity=org.opennms.plugins.pagerduty-plugin; type=osgi.bundle; version="[0.1.3,0.1.3]"; resolution:=mandatory [caused by: Unable to resolve org.opennms.plugins.pagerduty-plugin/0.1.3: missing requirement [org.opennms.plugins.pagerduty-plugin/0.1.3] osgi.wiring.package; filter:="(&(osgi.wiring.package=org.opennms.integration.api.v1.alarms)(version>=0.5.0)(!(version>=1.0.0)))"]]

The devs updated the master branch a few weeks ago to accommodate the changes in OpenNMS 30; however, it still does not build. I forked the project and made one small change, and it now compiles and works.

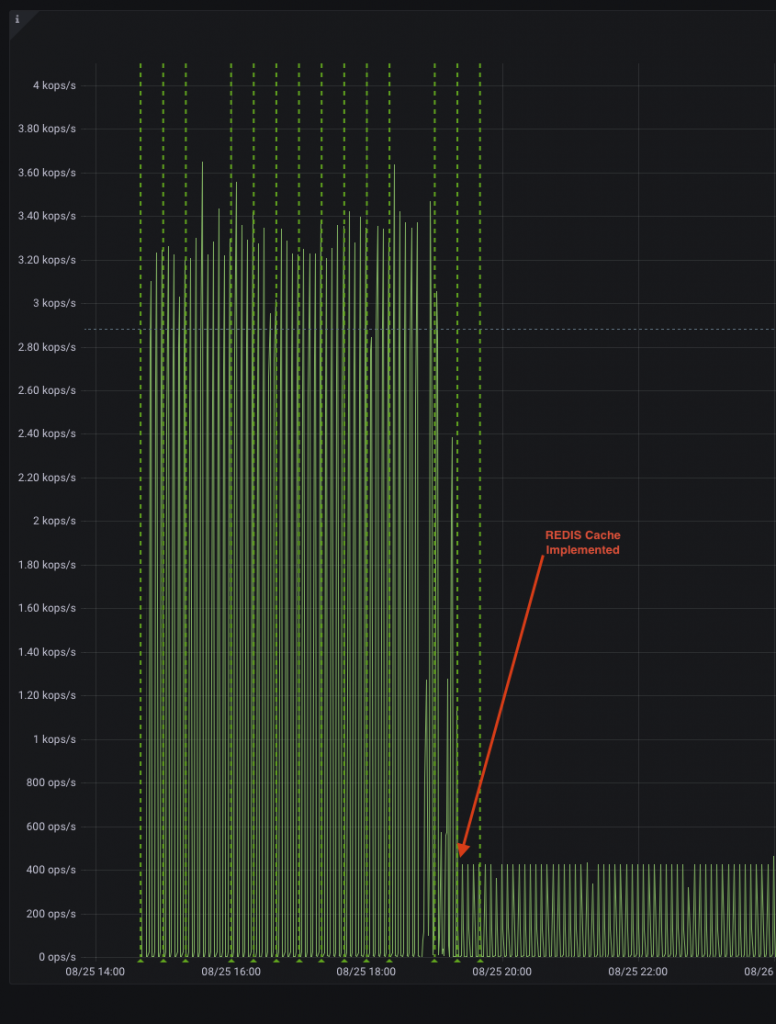
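The last `caused by` in that error is the real failure: the plugin bundle imports `org.opennms.integration.api.v1.alarms` with the filter `(version>=0.5.0)(!(version>=1.0.0))`, meaning at least 0.5.0 and strictly below 1.0.0, and nothing in the running Karaf container satisfies it. The minimal Python sketch below shows how that range test evaluates. The candidate versions are hypothetical examples, not the versions actually shipped with OpenNMS 30, and the optional OSGi qualifier segment is ignored for simplicity.

```python
from typing import Tuple


def parse_osgi_version(text: str) -> Tuple[int, int, int]:
    """Parse 'major[.minor[.micro]]' into a tuple, padding missing parts with 0.

    The optional fourth OSGi segment (the qualifier) is dropped in this sketch.
    """
    nums = [int(p) for p in text.split(".")[:3]]
    while len(nums) < 3:
        nums.append(0)
    return (nums[0], nums[1], nums[2])


def satisfies_import(exported: str, floor: str = "0.5.0", ceiling: str = "1.0.0") -> bool:
    """Mirror (version>=floor)(!(version>=ceiling)): floor inclusive, ceiling exclusive."""
    v = parse_osgi_version(exported)
    return parse_osgi_version(floor) <= v and not (v >= parse_osgi_version(ceiling))


if __name__ == "__main__":
    # Hypothetical exported versions, for illustration only.
    for candidate in ["0.4.9", "0.5.0", "0.9.3", "1.0.0", "1.2.1"]:
        print(candidate, satisfies_import(candidate))
```

Anything exported at 1.0.0 or higher falls outside the range, so a bundle built against the older API stays unresolved until it is rebuilt with an import range the container can actually satisfy.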

Scylla / OpenNMS NewTS? – Use a REDIS cache

We noticed that our larger hosts, specifically PoP routers with thousands of interfaces, were showing intermittent gaps in their resource graphs. This seemed strange, since the Scylla backend we use with NewTS has gobs of resources, and the Horizon server is also fine resource-wise. Well, as it turns out, implementing the Redis cache for OpenNMS/Horizon makes a world of difference.

In our case we went from ~3600 queries/sec against the Scylla cluster to ~450/sec, and all the graphing gaps went away. Viewing resource graphs also got faster. It would appear that Horizon’s internal cache is simply not as capable or as efficient as Redis.
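Going from ~3600 to ~450 queries/sec is an 87.5% reduction (450 / 3600 = 0.125, one eighth of the original load). The toy Python model below illustrates the general cache-aside idea behind that kind of drop: check a shared cache before touching the database, so repeated samples for resources that are already known never become database round trips. It is not OpenNMS or NewTS code; the class names, method names and resource IDs are hypothetical, and a plain dict stands in for Redis.

```python
from typing import Dict, Set


class CountingStore:
    """Stand-in for the Scylla cluster; it only counts how often it is hit."""

    def __init__(self) -> None:
        self.queries = 0

    def write_index(self, resource: str, metric: str) -> None:
        self.queries += 1  # each call represents one round trip to the cluster


class MetadataCache:
    """Hypothetical cache-aside wrapper; a dict plays the role of Redis here."""

    def __init__(self, store: CountingStore) -> None:
        self.store = store
        self.seen: Dict[str, Set[str]] = {}

    def index(self, resource: str, metric: str) -> None:
        metrics = self.seen.setdefault(resource, set())
        if metric in metrics:
            return  # cache hit: nothing is sent to the database
        self.store.write_index(resource, metric)  # cache miss: write, then remember it
        metrics.add(metric)


def simulate(polls: int, interfaces: int, use_cache: bool) -> int:
    """Return how many database queries a run of polls generates."""
    store = CountingStore()
    cache = MetadataCache(store)
    for _ in range(polls):
        for i in range(interfaces):
            resource = f"router1/if{i}"  # hypothetical resource id
            if use_cache:
                cache.index(resource, "ifHCInOctets")
            else:
                store.write_index(resource, "ifHCInOctets")
    return store.queries


if __name__ == "__main__":
    print("without cache:", simulate(polls=10, interfaces=500, use_cache=False))
    print("with cache:   ", simulate(polls=10, interfaces=500, use_cache=True))
```

The point of the model is the shape, not the numbers: once the working set of resources fits in the cache, database traffic scales with the number of new resources rather than with the number of samples.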

OpenNMS Email Notifications

Lately I’ve been facing an issue with some hosts that are only supposed to trigger a notification after a 10-minute outage. They are LTE devices, so they drop a lot, and we really don’t need to know about it until they’ve been down for a while; at that point we can take action.

We tried to exclude these from our catch-all nodeDown notification. All our LTE devices are in RFC1918 10.10.X.X space, so we added a filter to our global rule to exclude them:

!(IPADDR IPLIKE 10.10.*.*)

The positive form, (IPADDR IPLIKE 10.10.*.*), works just fine on our 10-minute delay notification.

It turns out the rule is checked against every IP address on the host, even ones you are not monitoring, so an LTE host with any unmonitored address outside 10.10.X.X still matches the negated rule and triggers the catch-all notification. This is a big problem: either you explicitly list every IP you want to EXCLUDE, or the code needs to be changed to only look at monitored IPs. I think an isManaged condition should be added to the SQL query. At any rate, if you’re getting mystery notifications that don’t show up in the validate list, that is why.
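To make the failure mode concrete, here is a small Python model of the behaviour described above: a node counts as matching the rule if any of its IP addresses satisfies it. This is not OpenNMS source code. The `iplike` helper is a simplified IPv4-only subset handling exact octets, `*` and `a-b` ranges (real IPLIKE syntax supports more), the `managed` flag stands in for the proposed isManaged restriction, and the addresses are made up.

```python
from dataclasses import dataclass
from typing import List


def octet_matches(pattern: str, value: int) -> bool:
    """Match one octet against '*', 'a-b', or an exact number."""
    if pattern == "*":
        return True
    if "-" in pattern:
        low, high = (int(p) for p in pattern.split("-", 1))
        return low <= value <= high
    return int(pattern) == value


def iplike(address: str, pattern: str) -> bool:
    """Simplified IPv4-only IPLIKE: every octet must match its pattern."""
    octets = [int(o) for o in address.split(".")]
    patterns = pattern.split(".")
    return len(octets) == len(patterns) == 4 and all(
        octet_matches(p, o) for p, o in zip(patterns, octets)
    )


@dataclass
class Interface:
    ip: str
    managed: bool


def node_matches_exclusion(interfaces: List[Interface], managed_only: bool) -> bool:
    """Evaluate !(IPADDR IPLIKE 10.10.*.*): true if ANY considered IP is outside 10.10."""
    considered = [i for i in interfaces if i.managed or not managed_only]
    return any(not iplike(i.ip, "10.10.*.*") for i in considered)


if __name__ == "__main__":
    # Hypothetical LTE node: the monitored IP is in 10.10.X.X,
    # but the host also carries an unmonitored LAN address.
    lte_node = [Interface("10.10.4.20", managed=True), Interface("192.168.1.1", managed=False)]
    print("all IPs:     ", node_matches_exclusion(lte_node, managed_only=False))
    print("managed only:", node_matches_exclusion(lte_node, managed_only=True))
```

With every address considered, the unmonitored 192.168.1.1 satisfies the negated rule, so the LTE node still hits the catch-all notification; restricting the check to managed interfaces gives the intended result.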

OpenNMS Horizon 30 Update / 503 / Karaf Failure

When upgrading to OpenNMS Horizon 30, you may find that even after following the standard upgrade procedure, OpenNMS returns an HTTP 503. That means Jetty started but… Karaf is dead. This appears to be another *finger guns* gotcha from the OpenNMS team for the non-paid product.

You must MANUALLY edit the config.properties file in opennms/etc so that it references the Felix framework version the upgrade moved to (6.0.5 instead of 6.0.4). Change

karaf.framework.felix=mvn\:org.apache.felix/org.apache.felix.framework/6.0.4

to

karaf.framework.felix=mvn\:org.apache.felix/org.apache.felix.framework/6.0.5
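If you have several instances to upgrade, the edit can be scripted. Below is a minimal Python sketch, assuming the file lives at `/opt/opennms/etc/config.properties` (an assumption; adjust for your install or pass the path as an argument) and that the Felix line reads exactly `karaf.framework.felix=mvn\:org.apache.felix/org.apache.felix.framework/6.0.4`. It keeps a `.bak` copy and only touches that one line.

```python
import re
import shutil
import sys
from pathlib import Path

# Assumed default location; the post only says "opennms/etc".
CONFIG = Path("/opt/opennms/etc/config.properties")

# Match the 6.0.4 Felix line; the lookahead leaves trailing whitespace and newlines untouched.
FELIX_LINE = re.compile(
    r"^(karaf\.framework\.felix=mvn\\:org\.apache\.felix/org\.apache\.felix\.framework/)"
    r"6\.0\.4(?=[ \t\r]*$)",
    re.MULTILINE,
)


def bump_felix(path: Path, new_version: str = "6.0.5") -> bool:
    """Rewrite the Felix framework reference; return True if the file changed."""
    text = path.read_text()
    updated, count = FELIX_LINE.subn(lambda m: m.group(1) + new_version, text)
    if count == 0:
        return False  # already updated, or the line looks different than expected
    shutil.copy2(path, path.with_name(path.name + ".bak"))  # keep the original
    path.write_text(updated)
    return True


if __name__ == "__main__":
    target = Path(sys.argv[1]) if len(sys.argv) > 1 else CONFIG
    if not target.is_file():
        print(f"{target} not found; pass the path to config.properties as an argument")
    else:
        print("updated" if bump_felix(target) else "no change needed")
```

Matching only the exact 6.0.4 line makes the script safe to run twice: the second run finds nothing to change and leaves the file alone.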

Wiki.JS docker-compose w/ postgres persistent storage via NFS and Traefik

Here’s an example of our docker-compose file for Wiki.JS with Postgres, NFS-backed DB storage and Traefik:

```yaml
version: "3"

volumes:
  db-data:
    driver_opts:
      type: "nfs"
      o: addr=nfshost.example.com,nolock,soft,rw
      device: ":/mnt/Pool0/WikiJS"

services:
  db:
    image: postgres:11-alpine
    environment:
      POSTGRES_DB: wiki
      POSTGRES_PASSWORD: Supersecurepassword
      POSTGRES_USER: wikijs
    command: postgres -c listen_addresses='*'
    logging:
      driver: "none"
    restart: unless-stopped
    networks:
      - internal
    labels:
      - traefik.enable=false
    volumes:
      - type: volume
        source: db-data
        target: /var/lib/postgresql/data
        volume:
          nocopy: true

  wiki:
    image: ghcr.io/requarks/wiki:2
    depends_on:
      - db
    environment:
      DB_TYPE: postgres
      DB_HOST: db
      DB_PORT: 5432
      DB_USER: wikijs
      DB_PASS: Supersecurepassword
      DB_NAME: wiki
    restart: unless-stopped
    networks:
      - proxy
      - internal
    labels:
      - "traefik.enable=true"
      - "traefik.docker.network=proxy"
      - "traefik.http.routers.ex-wikijs.entrypoints=https"
      - "traefik.http.routers.ex-wikijs.rule=Host(`wikijs.example.com`)"
      - "traefik.http.services.ex-wikijs.loadbalancer.server.port=3000"

networks:
  proxy:
    external: true
  internal:
    external: false
```

This stands up a Postgres instance that uses the NFS mount as its storage and is reachable only on the internal network, then stands up a Wiki.js instance that connects to it and gets it all going for you, all behind a Traefik proxy.
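Because the database name, user and password are repeated in both services, a typo in one of them is an easy way to end up with a Wiki.js container that cannot log in to Postgres. The small Python sanity check sketched below works on a dict that mirrors the relevant parts of the compose file above; in practice you might load the real file with a YAML parser such as PyYAML’s `yaml.safe_load`, which is an extra dependency and an assumption here, not part of the setup described.

```python
import copy
from typing import Any, Dict, List

# Mirrors the relevant keys of the compose file above.
COMPOSE: Dict[str, Any] = {
    "services": {
        "db": {
            "environment": {
                "POSTGRES_DB": "wiki",
                "POSTGRES_PASSWORD": "Supersecurepassword",
                "POSTGRES_USER": "wikijs",
            },
            "networks": ["internal"],
        },
        "wiki": {
            "environment": {
                "DB_TYPE": "postgres",
                "DB_HOST": "db",
                "DB_PORT": 5432,
                "DB_USER": "wikijs",
                "DB_PASS": "Supersecurepassword",
                "DB_NAME": "wiki",
            },
            "networks": ["proxy", "internal"],
        },
    }
}

# Wiki.js variable -> Postgres variable that must hold the same value.
PAIRS = {"DB_USER": "POSTGRES_USER", "DB_PASS": "POSTGRES_PASSWORD", "DB_NAME": "POSTGRES_DB"}


def check_compose(compose: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the wiring looks consistent."""
    services = compose["services"]
    db, wiki = services["db"], services["wiki"]
    problems: List[str] = []
    for wiki_key, db_key in PAIRS.items():
        if wiki["environment"].get(wiki_key) != db["environment"].get(db_key):
            problems.append(f"{wiki_key} does not match {db_key}")
    # Service names resolve as hostnames, so DB_HOST should name a service.
    if wiki["environment"].get("DB_HOST") not in services:
        problems.append("DB_HOST does not name a service in this file")
    # The two containers can only talk if they share at least one network.
    if not set(wiki["networks"]) & set(db["networks"]):
        problems.append("wiki and db share no network")
    return problems


if __name__ == "__main__":
    print(check_compose(COMPOSE) or "looks consistent")
    broken = copy.deepcopy(COMPOSE)
    broken["services"]["wiki"]["environment"]["DB_PASS"] = "typo"  # simulate a mistake
    print(check_compose(broken))
```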